Artificial intelligence, or AI, has the potential to be one of the most revolutionary forces in human history. Besides being the dream of every science fiction fanatic, it is already transforming the world we live in. This week, while browsing the latest science news, I found an article that made me stop and wonder just how close we are to the world of tomorrow, where sentient robots are our coworkers, friends, and peers. While I’m well aware that these wild dreams are just the futurist in me getting riled up, that excitement isn’t totally unwarranted. In recent years, our collective knowledge of how to build AI has advanced at a breakneck pace. Research published in Nature last week shows interesting progress that applies both to neuroscience and to our understanding of how the so-called “deep learning” systems that underpin many of our AI technologies actually function.

Before I get further into this post, it’s important to define two terms that come up when discussing AI: weak AI and artificial general intelligence (AGI). Weak AI is artificial intelligence designed to perform a specific task, while AGI refers to what populates science fiction the world over: a thinking machine that can learn, adapt, and apply itself to whatever problem is presented to it.

IBM’s Deep Blue, which famously beat chess grandmaster Garry Kasparov in 1997, is an older example of what the public knows as AI. Game-playing computers became even more refined with DeepMind’s AlphaGo, which defeated some of the world’s best players of the ancient Chinese game of Go, including Lee Sedol in 2016 and Ke Jie in 2017. Go is widely considered to be vastly more complex than chess. Though these might be textbook examples of weak AI, we are already surrounded by many everyday forms of weak AI. Siri, Google Assistant, and Amazon Alexa are taking over the mundane tasks of our days, like shooting off a quick text, creating a grocery list, setting reminders, or posing a question to the internet. Companies like Tesla, Uber, and Alphabet’s Waymo continue to push self-driving cars toward the market. At the rate this technology is progressing, we can already expect massive disruptions in the auto industry, from drivers to mechanics, and in city planning.

AI is even working its way into education. Intelligent tutoring systems, such as those from Carnegie Learning, use AI to monitor students’ progress as they work through the software and adapt to their learning styles, accommodating individual needs both in the classroom and at home. Some expect systems like these to improve the future of online education and to allow teachers to act as coaches rather than as the sole source of knowledge in the classroom. Other companies, such as Content Technologies, Inc., have introduced products like Cram101 and JustTheFacts101 that help students and teachers customize study guides and textbooks for more time-efficient learning. These systems might overturn the one-size-fits-all nature of education and help students with all learning styles reach their full potential. Combined with future VR technologies like the Global Learning Center, who knows where interactive, intelligent educational systems will take us?

As the world automates itself through increasingly sophisticated weak AI, many people still associate the term “artificial intelligence” only with the likes of Data from Star Trek, C-3PO from Star Wars, or, more recently, Ava from Ex Machina. This version of AI, however, has not yet been achieved, as Google scientists recently explained at the company’s I/O conference. That said, scientists are constantly chipping away at what makes intelligence tick by identifying abilities once thought unique to living beings and tasking their programs to replicate them.

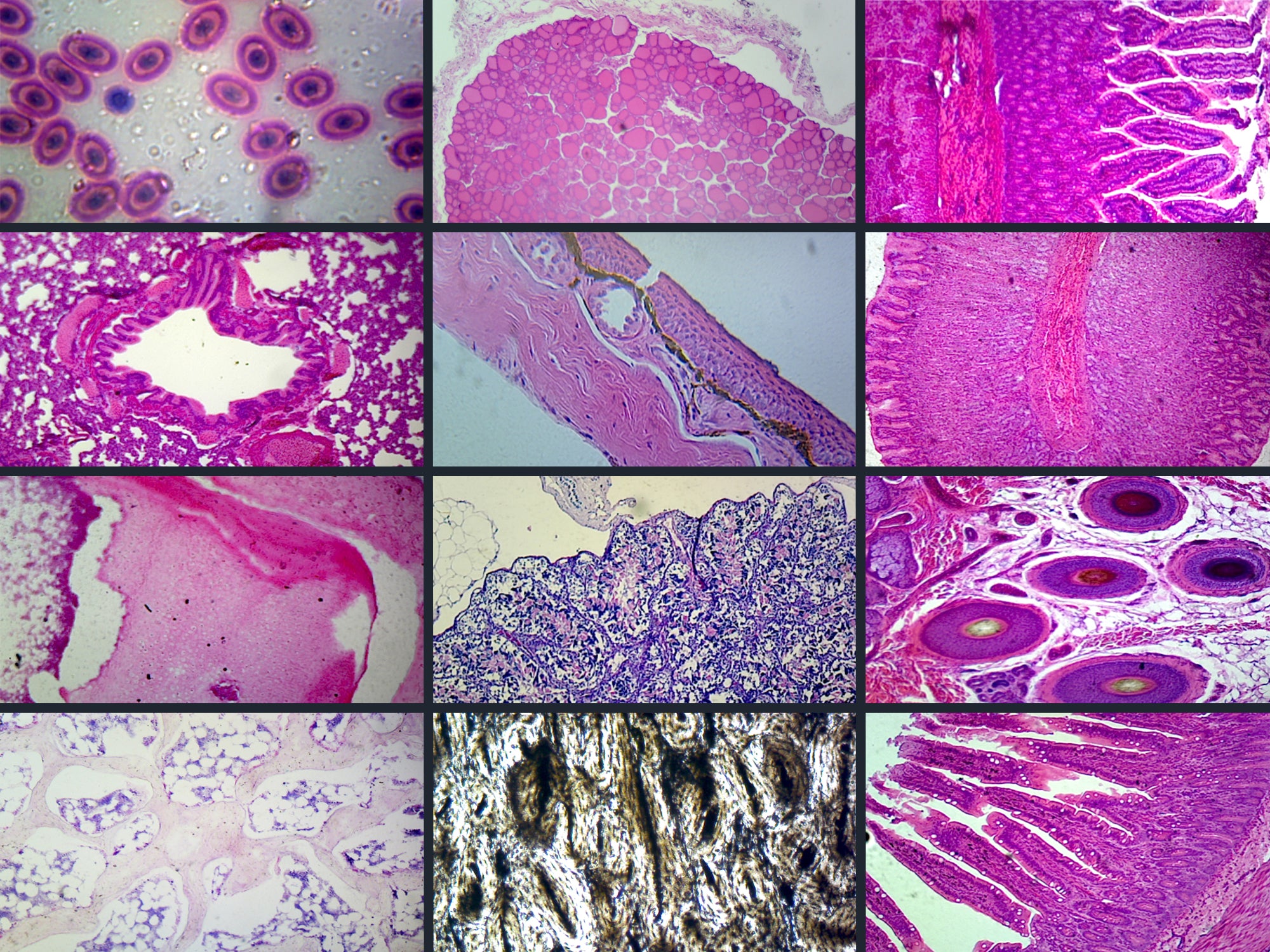

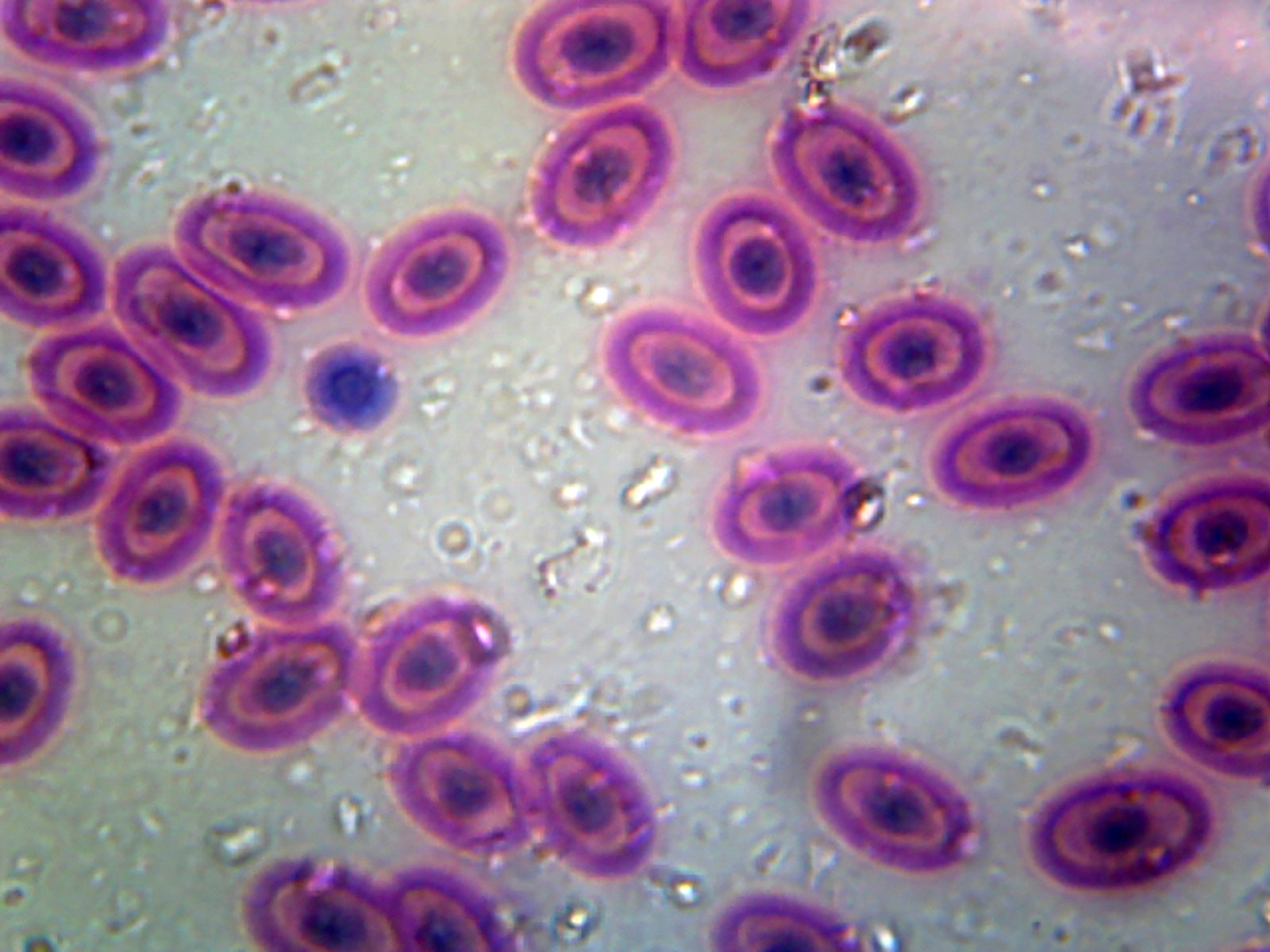

One such task is navigation. We, as living, breathing, thinking creatures, can navigate our environments intuitively, without a second thought. We can remember routes, look for shortcuts, and calculate paths to our destination, all without skipping a beat. In animals, researchers have found that specialized neurons called place cells, head-direction cells, and grid cells help us find our way in the world. In an amazing new study from DeepMind (the same company behind AlphaGo), scientists designed a deep learning system that spontaneously developed grid-like representations inside its network, resembling the grid cells we see in animal brains. Our brains essentially use grid cells to overlay a grid-like geometry on our surroundings, so that we can keep track of our movements and plan an efficient path from A to B. Equipped with grid-like units of their own, the artificially intelligent “agents,” as they are called, outperformed a professional human player at finding their goal in a maze. Furthermore, when the researchers “turned off” these grid-like units, the agents’ navigation suffered, indicating that the units are an essential part of effective navigation. Another navigational ability this study sheds light on is path integration: our ability to estimate our current position by keeping track of our own movements (our direction and distance traveled) from a known starting point.
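For the curious, the core idea of path integration fits in a few lines of code. The sketch below is a minimal, hand-written illustration that assumes an idealized, noise-free agent that knows its exact heading and step length; the function names `path_integrate` and `homing_vector` are hypothetical. It is not DeepMind’s model: their agents learned grid-like representations inside a trained neural network rather than following explicit rules like these. The `homing_vector` step shows the kind of “which way back, and how far?” calculation that vector-based navigation relies on.

```python
import math

# Hypothetical sketch of path integration ("dead reckoning"), not DeepMind's
# model: the agent starts at a known point and updates its position estimate
# from its own heading and distance at each step (assumed noise-free here).

def path_integrate(start, moves):
    """Return the estimated positions visited, starting with `start`.

    start: (x, y) known starting position.
    moves: sequence of (heading_radians, distance) self-motion readings.
    """
    x, y = start
    track = [(x, y)]
    for heading, distance in moves:
        # Displace the estimate along the current heading.
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
        track.append((x, y))
    return track


def homing_vector(current, goal):
    """Return (heading_radians, distance) pointing from current to goal."""
    dx, dy = goal[0] - current[0], goal[1] - current[1]
    return math.atan2(dy, dx), math.hypot(dx, dy)


if __name__ == "__main__":
    # Walk 3 units east, then 4 units north.
    track = path_integrate((0.0, 0.0), [(0.0, 3.0), (math.pi / 2, 4.0)])
    x, y = track[-1]
    print(round(x, 6), round(y, 6))  # 3.0 4.0
    heading, distance = homing_vector(track[-1], (0.0, 0.0))
    print(round(distance, 6))  # 5.0
```

In a real animal or agent, every movement reading is slightly noisy, so errors accumulate and a pure path-integration estimate drifts over time; this toy version ignores that problem entirely.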

This study is remarkable for several reasons. Perhaps the most interesting, to me at least, is that a deep learning system came up with grid-like navigational patterns, similar to those in our own biology, entirely of its own accord. I am blown away that the solution a computer arrived at for efficient navigation mirrors one that took evolution ages to develop and perfect. This shows me not only the beauty and effectiveness of evolution, but also that advanced AI might be more similar to us than we expect. Not being a scientist myself, though, I know to keep this wonderment in check and to watch as the real experts find out how closely AI “thinking” will mirror our own. At the very least, I think it speaks volumes about the effectiveness of our own navigational systems. This study is also interesting because it brings neuroscience and computer science together. By building artificial systems that function like our own, we may be able to study cognitive processes in a virtual environment in ways that aren’t possible with living subjects. And lastly, this is simply another milestone in the progress of artificial intelligence. In recent years, I’ve constantly been reading about milestones like this, and I can’t help but wonder what the next task will be that we teach an AI to perform at or above human level.

AI and its place in society are poised to spark some of the biggest ethical and philosophical debates of our time, and people are already hard at work on them. In an open letter, hundreds of scientists and technologists, including SpaceX’s Elon Musk and Apple co-founder Steve Wozniak, urged the global community to develop a roadmap for future AI research that is not just comprehensive, but also responsible and beneficial to humanity. While the future of AI is intensely debated, I know one thing for sure: I am very intrigued by its progress, and I cannot wait to watch the field grow.

Do you have any thoughts on the future of AI or the new research coming out of DeepMind? Please share in the comments below!

Written By: Jacob Monash